UltraSense brings Neural Processing to Smart Surfaces

- December 21, 2021

- William Payne

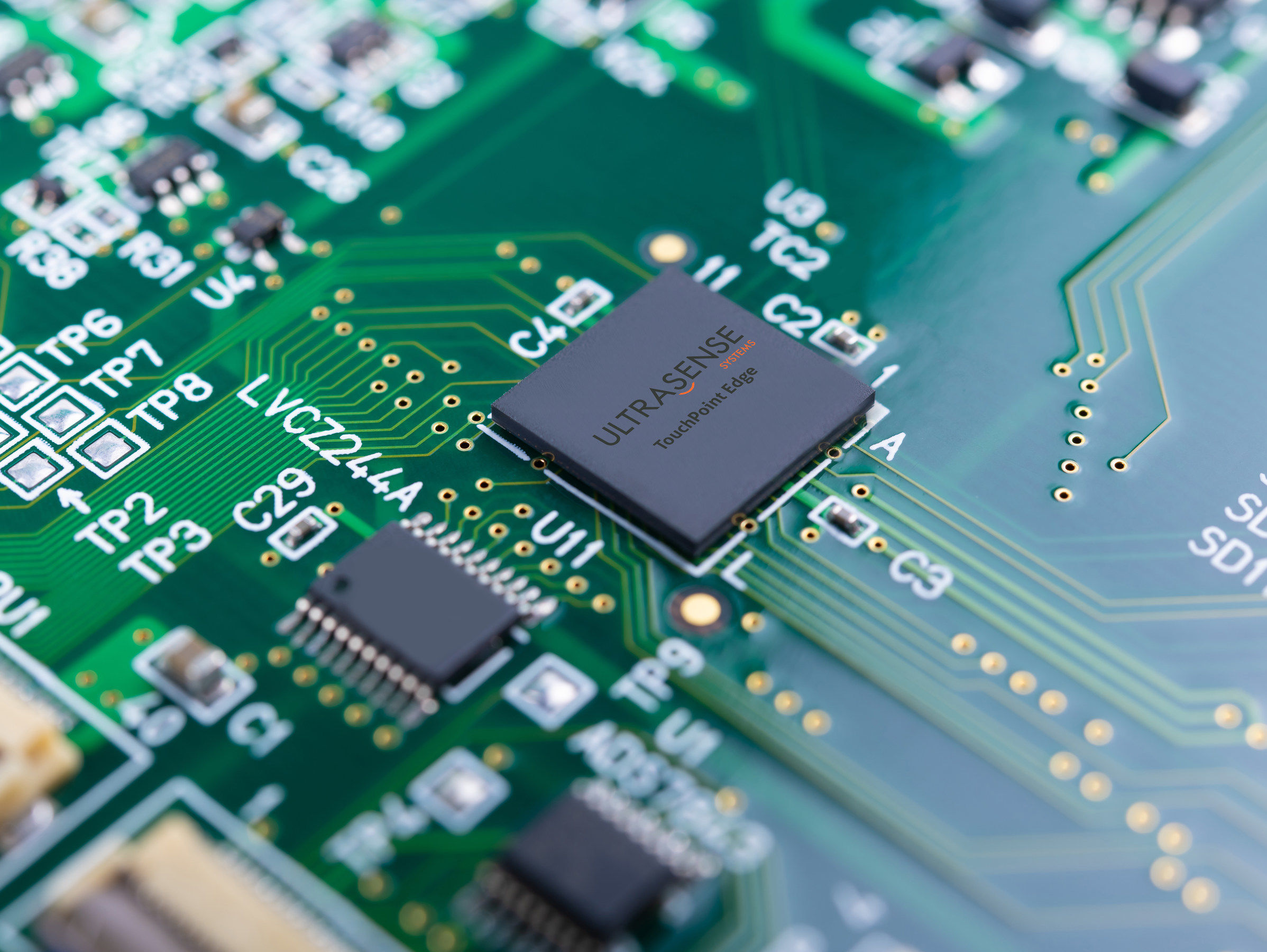

San Jose-based UI/UX specialist UltraSense Systems has released TouchPoint Edge, which replaces mechanical buttons with multi-mode touch sensing technology that works under any surface material, including metal, glass and plastic.

TouchPoint Edge is an integrated system-on-a-chip (SoC) that replicates the touch input of mechanical buttons by directly sensing up to eight standalone UltraSense TouchPoint P transducers, which combine ultrasound and force sensing. TouchPoint Edge uses an embedded, always-on Neural Touch Engine (NTE) to discern intended touches from unintended false touches, eliminating corner cases and providing the input accuracy of a mechanical button.

A smart surface is a solid surface with underside illumination to show the user where to touch. The UI/UX shift started with the smartphone over a decade ago, when the mechanical keyboard gave way to a keyboard tapped on a capacitive screen.

TouchPoint Edge is designed to take smart surfaces into applications that use many mechanical buttons. The company cites the automobile cockpit as an example, where use cases include replacing mechanical buttons in the steering wheel, in centre and overhead console controls for HVAC and lighting, and in door panels for seating and window controls. Sensors can even be embedded into soft surfaces such as leather or foam seating to create new user interfaces where mechanical buttons could not be implemented before. Other applications include appliance touch panels, smart locks, security access control panels and elevator button panels.

With multi-mode sensing and the embedded Neural Touch Engine, TouchPoint Edge runs machine learning and neural network algorithms on-chip so that the user's intention can be learned. TouchPoint Edge captures the pattern of the user’s press with respect to the surface material. The data set is then used to train the neural network to learn and discern the user’s press pattern, unlike algorithms that accept or reject a touch based on a single force threshold. Once TouchPoint Edge is trained and optimised to a user’s press pattern, the natural response of a button press can be recognised.
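The contrast between a single force threshold and a learned press pattern can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the two input channels (`force` and `ultrasound_contact`), the sample values and the function names are hypothetical, and a single-neuron perceptron stands in for the neural network. It is not UltraSense's algorithm or data.

```python
# Hypothetical labelled presses: (force, ultrasound_contact), arbitrary 0..1 units.
# Label 1 = intended press, 0 = unintended touch. All values are made up.
SAMPLES = [
    ((0.80, 0.90), 1),  # firm, deliberate press
    ((0.60, 0.85), 1),
    ((0.45, 0.80), 1),  # light but deliberate press
    ((0.90, 0.10), 0),  # strong surface flex, no finger contact
    ((0.70, 0.05), 0),
    ((0.10, 0.70), 0),  # finger resting on the surface, almost no force
    ((0.05, 0.10), 0),  # idle
]


def threshold_detect(sample, force_threshold=0.5):
    """Baseline: accept a touch whenever force exceeds one fixed threshold."""
    force, _ = sample
    return 1 if force > force_threshold else 0


def train_perceptron(samples, max_epochs=1000):
    """Train a single neuron on both channels with the perceptron rule."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, label in samples:
            y = 1 if label == 1 else -1
            score = w[0] * x[0] + w[1] * x[1] + b
            if y * score <= 0:  # misclassified: nudge weights toward the label
                w[0] += y * x[0]
                w[1] += y * x[1]
                b += y
                mistakes += 1
        if mistakes == 0:  # every sample is classified correctly
            break
    return w, b


def learned_detect(sample, w, b):
    """Accept a touch when the trained neuron's score is positive."""
    return 1 if w[0] * sample[0] + w[1] * sample[1] + b > 0 else 0


def accuracy(predict, samples):
    """Fraction of samples whose prediction matches the label."""
    return sum(predict(x) == label for x, label in samples) / len(samples)


w, b = train_perceptron(SAMPLES)
print("single force threshold:", accuracy(threshold_detect, SAMPLES))
print("learned press pattern: ", accuracy(lambda s: learned_detect(s, w, b), SAMPLES))
```

In this toy data set no single force threshold separates the two classes, because a light deliberate press carries less force than a surface flex. A model that weighs both channels together can separate them, which is the general idea behind learning a press pattern instead of comparing against one force value.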

The sensor array design of the TouchPoint P transducer allows multi-channel data sets to be captured within a small, localised area, where a mechanical button would be located, improving the neural network's ability to replicate a button press.

The Neural Touch Engine integrated into TouchPoint Edge improves system efficiency: neural processing runs 27X faster and uses 80% less power than in the same system setup with processing offloaded to an external ultra-low-power microcontroller.

“In just three years from first funding, we were able to develop, qualify and ship to OEMs and ODMs a fully integrated virtual button solution for smart surfaces,” said Mo Maghsoudnia, CEO of UltraSense Systems. “We are the only multi-mode sensor solution for smart surfaces, designed from the ground up to put neural touch processing into everything from battery-powered devices to consumer/industrial IoT products and now automotive in a big way.”

“The challenges of replacing traditional mechanical buttons with sensor-based solutions requires technologies such as illumination of the solid surface, ultrasound or capacitive sensing, and force sensing,” said Nina Turner, research manager of IDC. “But those sensors alone can lead to false positives. The integration of machine learning integrated with these touch sensors brings a new level of intelligence to the touch sensor market and would be beneficial in a wide array of devices and markets.”