MIT, IBM develop microcontroller AI training

- October 5, 2022

- William Payne

Researchers at MIT and the MIT-IBM Watson AI Lab have developed a machine learning technique that enables training on an industrial microcontroller using less than a quarter of a megabyte of memory. By comparison, other training solutions designed for connected devices can use more than 500 megabytes of memory, far exceeding the 256-kilobyte capacity of most microcontrollers.

Microcontrollers are at the heart of industrial OT and IoT systems. But cheap, low-power microcontrollers have extremely limited memory and no operating system, making it challenging to train artificial intelligence models on “edge devices” that work independently from central computing resources.

Today, most AI training for OT and industrial IoT networks is performed in the cloud or a data centre, and the trained model is then transferred to the edge device. This approach is more costly and raises privacy and data security issues, since operational data must be sent to a central server.

MIT and IBM researchers have developed algorithms and a framework to reduce the amount of computation required to train a model, which makes the process faster and more memory efficient. The MIT-IBM technique can be used to train a machine-learning model on a microcontroller in a matter of minutes.

The technique also helps preserve privacy and data security by keeping data on the device. That makes it applicable not only in industrial environments but also in medical settings, where data can be especially sensitive.

“Our study enables IoT devices to not only perform inference but also continuously update the AI models to newly collected data, paving the way for lifelong on-device learning. The low resource utilisation makes deep learning more accessible and can have a broader reach, especially for low-power edge devices,” said Song Han, an associate professor in the Department of Electrical Engineering and Computer Science (EECS), a member of the MIT-IBM Watson AI Lab, and senior author of the paper describing this innovation.

Han and his team previously addressed the memory and computational bottlenecks that exist when trying to run machine-learning models on tiny edge devices, as part of their TinyML initiative.

Han and his collaborators employed two algorithmic solutions to make the training process more efficient and less memory-intensive. The first, known as sparse update, uses an algorithm that identifies the most important weights to update at each round of training. The algorithm freezes the weights one at a time until it sees the accuracy dip to a set threshold, and then stops. The remaining weights are updated, while the activations corresponding to the frozen weights don’t need to be stored in memory.

“Updating the whole model is very expensive because there are a lot of activations, so people tend to update only the last layer, but as you can imagine, this hurts the accuracy. For our method, we selectively update those important weights and make sure the accuracy is fully preserved,” Han said.
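The sparse update idea described above can be illustrated with a minimal sketch. It follows the article's description (freeze one at a time, stop at an accuracy threshold) rather than the researchers' actual selection procedure, and it works at layer granularity for brevity. The function `select_sparse_update`, the layer names, and the toy accuracy model are all hypothetical.

```python
from typing import Callable, List, Set


def select_sparse_update(
    layers: List[str],
    accuracy_fn: Callable[[Set[str]], float],
    min_accuracy: float,
) -> Set[str]:
    """Greedily freeze layers while accuracy stays at or above min_accuracy.

    accuracy_fn is a hypothetical callback that returns the accuracy reached
    when only the given set of layers is updated during training.
    """
    trainable = set(layers)
    while trainable:
        # Try freezing each remaining layer; keep the freeze that hurts least.
        best_layer, best_acc = None, float("-inf")
        for name in sorted(trainable):
            acc = accuracy_fn(trainable - {name})
            if acc > best_acc:
                best_layer, best_acc = name, acc
        if best_acc < min_accuracy:
            break  # any further freeze would dip below the threshold
        trainable.remove(best_layer)
    return trainable


# Toy stand-in for a real evaluation: each layer adds a fixed accuracy gain
# when it is updated. These numbers are invented for illustration only.
GAIN = {"conv1": 0.01, "conv2": 0.02, "conv3": 0.06, "classifier": 0.10}
BASE = 0.70


def toy_accuracy(trainable: Set[str]) -> float:
    return BASE + sum(GAIN[name] for name in trainable)


selected = select_sparse_update(list(GAIN), toy_accuracy, min_accuracy=0.85)
print(sorted(selected))
```

With these invented numbers the sketch keeps `conv3` and `classifier` trainable and freezes the two early layers, so only the activations feeding the two updated layers would need to be held in memory.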

Their second solution involves quantised training, which simplifies the weights. Weights are typically stored as 32-bit values; an algorithm rounds them to eight bits through a process known as quantisation, which cuts the amount of memory needed for both training and inference. (Inference is the process of applying a model to data and generating a prediction.) The algorithm then applies a technique called quantisation-aware scaling (QAS), which acts like a multiplier to adjust the ratio between weight and gradient, avoiding any drop in accuracy that may come from quantised training.
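To make the quantisation step and the weight-to-gradient ratio concrete, here is a rough sketch in plain Python. It assumes symmetric per-tensor quantisation (a weight `w` is approximated by `scale * q`, with `q` an 8-bit integer) and assumes the QAS multiplier takes the form `scale ** -2`; both are illustrative assumptions, not a statement of the researchers' exact implementation. The function names and sample values are hypothetical.

```python
import math
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantisation: each w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def l2_norm(values: List[float]) -> float:
    return math.sqrt(sum(v * v for v in values))


def qas_gradient(grad_q: List[float], scale: float) -> List[float]:
    """Assumed QAS form: rescale the quantised-weight gradient by scale**-2."""
    return [g / (scale * scale) for g in grad_q]


weights = [0.5, -0.2, 0.1, 0.0]      # hypothetical 32-bit weights
grads = [0.02, -0.01, 0.03, 0.04]    # hypothetical gradients w.r.t. those weights

q, scale = quantize_int8(weights)

# Chain rule: since w = scale * q, the gradient w.r.t. q is scale * dL/dw.
grad_q = [scale * g for g in grads]

ratio_fp32 = l2_norm(weights) / l2_norm(grads)
ratio_int8 = l2_norm(q) / l2_norm(grad_q)                       # off by about scale**-2
ratio_qas = l2_norm(q) / l2_norm(qas_gradient(grad_q, scale))   # close to ratio_fp32

print(q, ratio_fp32, ratio_int8, ratio_qas)
```

Each weight drops from four bytes to one. Without the rescaling, the quantised weights are large relative to their gradients, so a learning rate tuned for 32-bit training would barely move them; the multiplier brings the ratio back in line with the 32-bit case.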

The researchers developed a system, called a tiny training engine, that can run these algorithmic innovations on a simple microcontroller that lacks an operating system. This system changes the order of steps in the training process so more work is completed in the compilation stage, before the model is deployed on the edge device.

“We push a lot of the computation, such as auto-differentiation and graph optimisation, to compile time. We also aggressively prune the redundant operators to support sparse updates. Once at runtime, we have much less workload to do on the device,” Han said.
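One way to picture the compile-time pruning Han describes is a planner that decides, before deployment, which backward operators are needed at all. The sketch below is hypothetical and assumes a plain chain of linear or convolutional layers, where a weight gradient needs the layer's saved input activation and an input gradient needs only the weights. The function `plan_backward` and the operator names are invented for illustration and are not the tiny training engine's API.

```python
from typing import List, Set, Tuple


def plan_backward(layers: List[str], trainable: Set[str]) -> Tuple[List[str], List[str]]:
    """Return (backward ops to emit, layers whose input activations must be saved)."""
    indices = [i for i, name in enumerate(layers) if name in trainable]
    if not indices:
        return [], []
    first = min(indices)
    ops: List[str] = []
    saved: List[str] = []
    # Walk from the output back to the earliest trainable layer and stop there.
    for i in range(len(layers) - 1, first - 1, -1):
        name = layers[i]
        if name in trainable:
            ops.append(f"grad_weight({name})")
            saved.append(name)  # the weight gradient needs this layer's input
        if i > first:
            ops.append(f"grad_input({name})")  # pass the gradient further back
    return ops, saved


layers = ["conv1", "conv2", "conv3", "classifier"]

sparse_ops, sparse_saved = plan_backward(layers, {"conv3", "classifier"})
full_ops, full_saved = plan_backward(layers, set(layers))

print(sparse_ops)
print(len(full_ops), len(sparse_ops))
```

In this toy chain the sparse plan emits three backward operators instead of seven and saves two activations instead of four. Because the plan is fixed ahead of time, the device only executes the operators that survive pruning.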

Their optimisation required only 157 kilobytes of memory to train a machine-learning model on a microcontroller, whereas other techniques designed for lightweight training would still need between 300 and 600 megabytes.

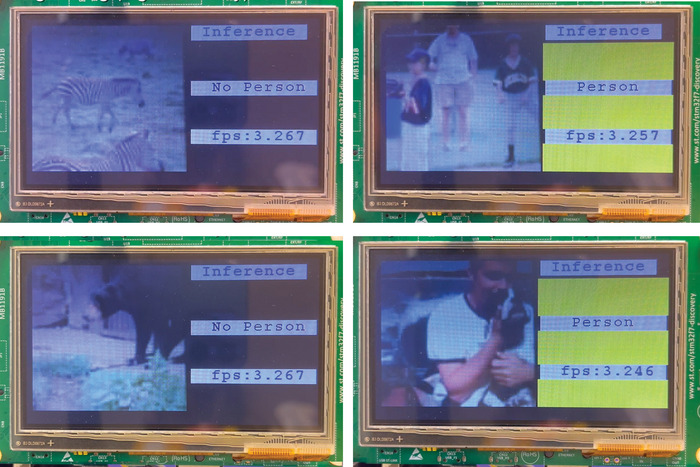

They tested their framework by training a computer vision model to detect people in images. After only 10 minutes of training, it learned to complete the task successfully. Their method was able to train a model more than 20 times faster than other approaches.

Now that they have demonstrated the success of these techniques for computer vision models, the researchers want to apply them to language models and different types of data, such as time-series data. At the same time, they want to use what they’ve learned to shrink the size of larger models without sacrificing accuracy, which could help reduce the carbon footprint of training large-scale machine-learning models.

“AI model adaptation/training on a device, especially on embedded controllers, is an open challenge. This research from MIT has not only successfully demonstrated the capabilities, but also opened up new possibilities for privacy-preserving device personalisation in real-time,” said Nilesh Jain, a principal engineer at Intel who was not involved with this work. “Innovations in the publication have broader applicability and will ignite new systems-algorithm co-design research.”

“On-device learning is the next major advance we are working toward for the connected intelligent edge. Professor Song Han’s group has shown great progress in demonstrating the effectiveness of edge devices for training,” said Jilei Hou, vice president and head of AI research at Qualcomm. “Qualcomm has awarded his team an Innovation Fellowship for further innovation and advancement in this area.”