NXP boosts edge image processing with Kinara

- September 20, 2022

- William Payne

NXP has added high-performance inferencing to its AI-enabled products through a collaboration with Kinara. As an immediate result, NXP’s i.MX processors now offer enhanced computer vision analytics for industrial IoT, smart city and smart retail applications.

Kinara is an edge computing inferencing specialist. The company produces processors optimised for inferencing, the “production” stage of deep learning. AI chips typically balance training and inferencing requirements, but in production environments such as industrial edge analytics, inference is typically the main workload.

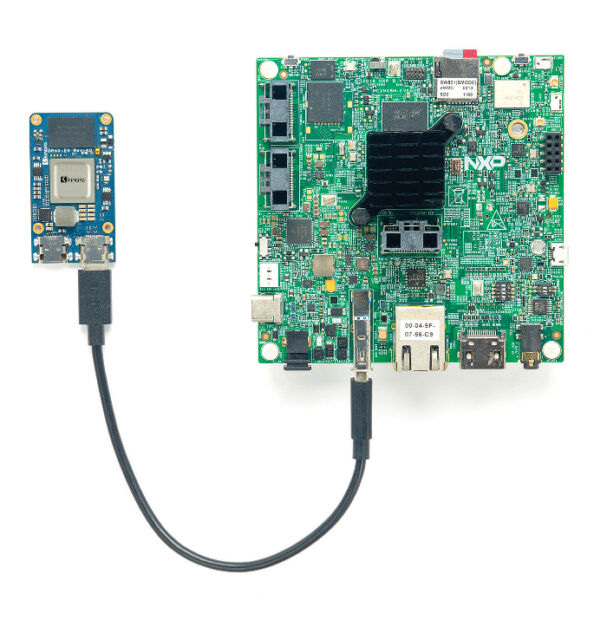

The collaboration between NXP and Kinara sees the latter’s Ara-1 edge AI processors paired with NXP’s i.MX applications processors, as well as other NXP AI-enabled processors.

“Our processing solutions and AI software stacks enable a very wide range of AI performance requirements – this is a necessity given our extremely broad customer base,” said Joe Yu, Vice President and General Manager, IoT Edge Processing, NXP Semiconductors. “By working with Kinara to help satisfy our customers’ requirements at the highest end of edge AI processing, we will bring high performance AI to smart retail, smart city, and industrial markets.”

“We see two general trends with our Edge AI customers. One trend is a shift towards a Kinara solution that significantly reduces the cost and energy of their current platforms that use a traditional GPU for AI acceleration. The other trend calls for replacing Edge AI accelerators from well-known brands with Kinara’s Ara-1 allowing the customer to achieve at least a 4x performance improvement at the same or better price,” said Ravi Annavajjhala, CEO, Kinara. “Our collaboration with NXP will allow us to offer very compelling system-level solutions that include commercial-grade Linux and driver support that complements the end-to-end inference pipeline.”

“Intelligent vision processing is an exploding market that is a natural fit for machine learning. But vision systems are getting increasingly complex, with more and larger sensors, and model sizes are growing. To keep pace with these trends requires dedicated AI accelerators that can handle the processing load efficiently – both in power and silicon area,” said Kevin Krewell, principal analyst at TIRIAS Research. “The best modular approach to vision systems is a combination of an established embedded processor and a power-efficient AI accelerator, like the combination of NXP’s i.MX family of embedded applications processors and the Kinara AI accelerator.”

NXP’s AI processing solutions encompass its microcontrollers (MCUs), i.MX RT series of crossover MCUs and i.MX applications processor families, which represent a variety of multicore solutions for multimedia and display applications.

NXP’s portfolio covers a range of AI processing needs natively. Use cases that need higher-performance AI because of higher frame rates, higher image resolution or more sensors can be served by pairing NXP processors with Kinara’s Ara-1. Customers can then scale up by partitioning the AI workload between the NXP device and the Ara-1, while keeping common application software running on the NXP processor.